Editor’s note: This article, originally published on March 13, 2023, has been updated.

The mics were live and tape was rolling in the studio where the Miles Davis Quintet was recording dozens of tunes in 1956 for Prestige Records.

When an engineer asked for the next song’s title, Davis shot back, “I’ll play it, and tell you what it is later.”

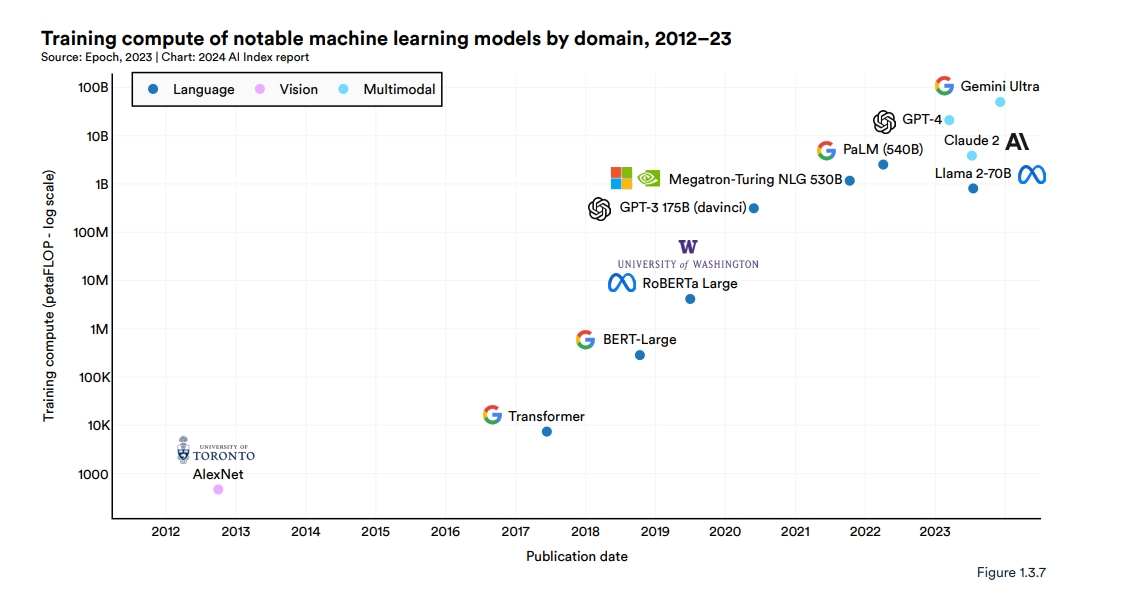

Like the prolific jazz trumpeter and composer, researchers have been generating AI models at a feverish pace, exploring new architectures and use cases. According to the 2024 AI Index report from the Stanford Institute for Human-Centered Artificial Intelligence, 149 foundation models were published in 2023, more than double the number released in 2022.

Researchers at the institute said transformer models, large language models (LLMs), vision language models (VLMs) and other neural networks still being built are part of an important new category they dubbed foundation models.

Foundation Models Defined

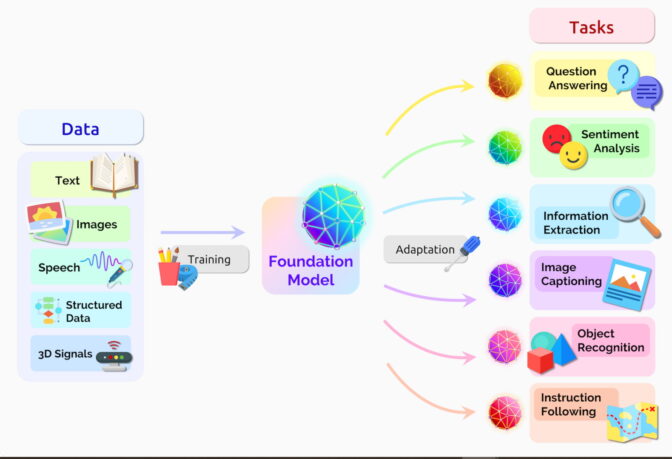

A foundation model is an AI neural network that is trained on mountains of raw data, generally with unsupervised learning, and can be adapted to accomplish a broad range of tasks.

Two important concepts help define this umbrella category: Data gathering is easier, and opportunities are as wide as the horizon.

No Labels, Lots of Opportunity

Foundation models generally learn from unlabeled datasets, saving the time and expense of manually describing each item in massive collections.
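To make the idea concrete, here is a minimal sketch of how raw text can supply its own training targets, assuming a toy next-word prediction objective. The function name `make_training_pairs` and the sample sentence are illustrative assumptions, not part of any particular model’s pipeline.

```python
def make_training_pairs(text, context_size=3):
    """Turn raw, unlabeled text into (context, next_word) training pairs.

    Hypothetical helper for illustration: the "label" for each example is
    simply the word that follows the context in the original text, so no
    human annotation is needed.
    """
    words = text.lower().split()
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        pairs.append((context, words[i]))
    return pairs


raw_text = "the quintet recorded dozens of tunes in the studio"
for context, target in make_training_pairs(raw_text):
    print(context, "->", target)
```

Every sliding window of words yields one example, which is why a large pile of unlabeled text can become a large training set without anyone describing each item by hand.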

Earlier neural networks were narrowly tuned for specific tasks. With a little fine-tuning, foundation models can handle jobs from translating text to analyzing medical images to performing agent-based behaviors.

“I think we’ve uncovered a very small fraction of the capabilities of existing foundation models, let alone future ones,” said Percy Liang, director of Stanford’s Center for Research on Foundation Models, in the opening talk of the first workshop on foundation models.

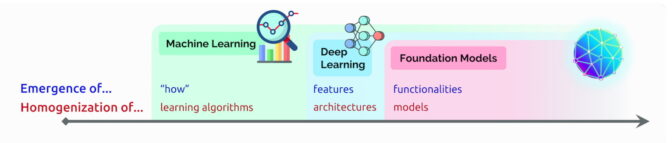

AI’s Emergence and Homogenization

In that talk, Liang coined two terms to describe foundation models:

Emergence refers to AI features still being discovered, such as the many nascent skills in foundation models. He calls the blending of AI algorithms and model architectures homogenization, a trend that helped form foundation models. (See chart below.)

The field continues to move fast.

A year after the group defined foundation models, other tech watchers coined a related term: generative AI. It’s an umbrella term for transformers, large language models, diffusion models and other neural networks capturing people’s imaginations because they can create text, images, music, software, videos and more.

Generative AI has the potential to yield trillions of dollars of economic value, said executives from the venture firm Sequoia Capital who shared their views in a recent AI Podcast.

A Brief History of Foundation Models

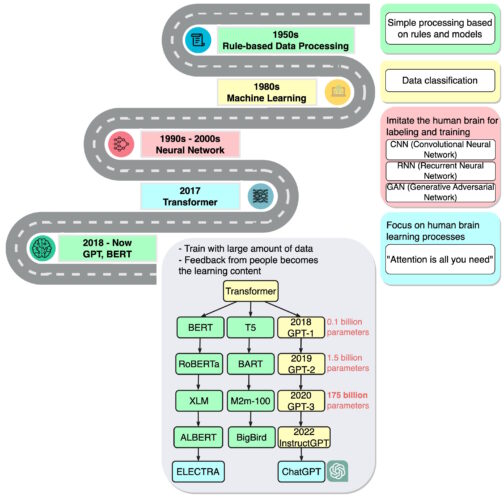

“We are in a time where simple methods like neural networks are giving us an explosion of new capabilities,” said Ashish Vaswani, an entrepreneur and former senior staff research scientist at Google Brain who led work on the seminal 2017 paper on transformers.

That work inspired researchers who created BERT and other large language models, making 2018 “a watershed moment” for natural language processing, a report on AI said at the end of that year.

Google released BERT as open-source software, spawning a family of follow-ons and setting off a race to build ever larger, more powerful LLMs. Then it applied the technology to its search engine so users could ask questions in simple sentences.

In 2020, researchers at OpenAI announced another landmark transformer, GPT-3. Within weeks, people were using it to create poems, programs, songs, websites and more.

“Language models have a wide range of beneficial applications for society,” the researchers wrote.

Their work also showed how large and compute-intensive these models can be. GPT-3 was trained on a dataset with nearly a trillion words, and it sports a whopping 175 billion parameters, a key measure of the power and complexity of neural networks. In 2024, Google released Gemini Ultra, a state-of-the-art foundation model that required 50 billion petaFLOPs of compute to train.
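A little back-of-the-envelope arithmetic puts those figures in perspective. The sketch below assumes each parameter is stored as a 16-bit (2-byte) number, which is an illustrative assumption rather than a detail from the GPT-3 researchers, and it reads the Gemini Ultra figure as a total count of floating-point operations.

```python
PETA = 10**15  # one petaFLOP is 10^15 floating-point operations

# GPT-3: 175 billion parameters (figure from the article).
gpt3_params = 175 * 10**9
bytes_per_param = 2  # assumption: 16-bit weights
gpt3_weight_gb = gpt3_params * bytes_per_param / 10**9
print(f"GPT-3 weights at 2 bytes each: {gpt3_weight_gb:.0f} GB")

# Gemini Ultra: 50 billion petaFLOPs (figure from the article),
# interpreted here as total operations rather than operations per second.
gemini_total_flops = 50 * 10**9 * PETA
print(f"Gemini Ultra compute: {gemini_total_flops:.1e} operations")
```

Under those assumptions, just holding GPT-3’s weights takes hundreds of gigabytes, far more than a single consumer GPU offers, which helps explain why training and serving such models calls for clusters of accelerators.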

“I just remember being kind of blown away by the things that it could do,” said Liang, speaking of GPT-3 in a podcast.

The latest iteration, ChatGPT (trained on 10,000 NVIDIA GPUs), is even more engaging, attracting over 100 million users in just two months. Its release has been called the iPhone moment for AI because it helped so many people see how they could use the technology.

Going Multimodal

Foundation models have also expanded to process and generate multiple data types, or modalities, such as text, images, audio and video. VLMs are one type of multimodal model that can understand video, image and text inputs while producing text or visual output.

Trained on 355,000 videos and 2.8 million images, Cosmos Nemotron 34B is a leading VLM that can query and summarize images and video from the physical or virtual world.

From Text to Images

About the same time ChatGPT debuted, another class of neural networks, called diffusion models, made a splash. Their ability to turn text descriptions into artistic images attracted casual users, who created amazing images that went viral on social media.

The first paper to describe a diffusion model arrived with little fanfare in 2015. But like transformers, the new technique soon caught fire.

In a tweet, Midjourney CEO David Holz revealed that his diffusion-based, text-to-image service has more than 4.4 million users. Serving them requires more than 10,000 NVIDIA GPUs, mainly for AI inference, he said in an interview (subscription required).

Toward Models That Understand the Physical World

The next frontier of artificial intelligence is physical AI, which enables autonomous machines like robots and self-driving cars to interact with the real world.

AI performance for autonomous vehicles or robots requires extensive training and testing. To ensure physical AI systems are safe, developers need to train and test their systems on massive amounts of data, which can be costly and time-consuming.

World foundation models, which can simulate real-world environments and predict accurate outcomes based on text, image or video input, offer a promising solution.

Physical AI development teams are using NVIDIA Cosmos world foundation models, a suite of pre-trained autoregressive and diffusion models trained on 20 million hours of driving and robotics data, with the NVIDIA Omniverse platform to generate massive amounts of controllable, physics-based synthetic data for physical AI. Awarded the Best AI and Best Overall awards at CES 2025, Cosmos world foundation models are open models that can be customized for downstream use cases or tuned with use case-specific data to improve precision on a specific task.

Dozens of Models in Use

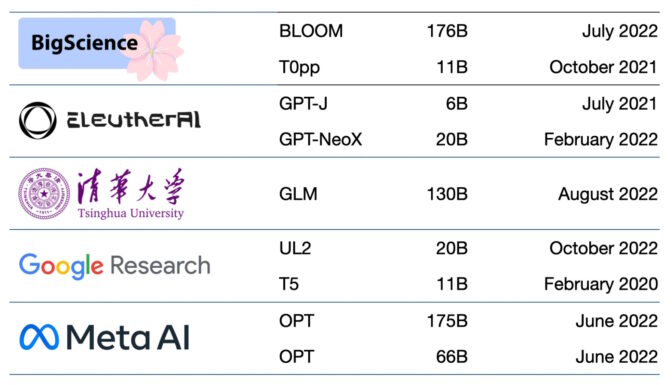

Hundreds of foundation models are now available. One paper catalogs and classifies more than 50 major transformer models alone (see chart below).

The Stanford group benchmarked 30 foundation models, noting the field is moving so fast they did not review some new and prominent ones.

Startup NLP Cloud, a member of the NVIDIA Inception program that nurtures cutting-edge startups, says it uses about 25 large language models in a commercial offering that serves airlines, pharmacies and other users. Experts expect that a growing share of the models will be made open source on sites like Hugging Face’s model hub.

Foundation models keep getting larger and more complex, too.

That’s why, rather than building new models from scratch, many businesses are already customizing pretrained foundation models to turbocharge their journeys into AI, using online services like NVIDIA AI Foundation Models.

The accuracy and reliability of generative AI are improving thanks to techniques like retrieval-augmented generation, aka RAG, which lets foundation models tap into external resources like a corporate knowledge base.
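The retrieval step can be sketched in a few lines. This is a toy illustration, not how any production RAG system is built: it assumes a three-sentence knowledge base and uses simple word-count similarity where a real system would use learned embeddings and a vector index. The names `KNOWLEDGE_BASE`, `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; a real system would index company documents.
KNOWLEDGE_BASE = [
    "Refunds are issued within 14 days of a returned purchase.",
    "Support is available by phone on weekdays from 9 a.m. to 5 p.m.",
    "Orders over 50 dollars ship free within the country.",
]


def bag_of_words(text):
    """Count lowercase word occurrences; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    query = bag_of_words(question)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, bag_of_words(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Prepend retrieved passages so the model can ground its answer."""
    context = "\n".join(retrieve(question, documents))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# The resulting prompt would be sent to a foundation model (not shown).
print(build_prompt("When are refunds issued?", KNOWLEDGE_BASE))
```

The model itself is unchanged in this pattern. It simply receives the most relevant passage alongside the question, so its answer can draw on facts it was never trained on.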

AI Foundations for Business

Another tool, the NVIDIA NeMo framework, aims to let any business create its own billion- or trillion-parameter transformers to power custom chatbots, personal assistants and other AI applications.

It created the 530-billion-parameter Megatron-Turing Natural Language Generation model (MT-NLG) that powers TJ, the Toy Jensen avatar that gave part of the keynote at NVIDIA GTC last year.

Foundation models, connected to 3D platforms like NVIDIA Omniverse, will be key to simplifying development of the metaverse, the 3D evolution of the internet. These models will power applications and assets for entertainment and industrial users.

Factories and warehouses are already applying foundation models inside digital twins, realistic simulations that help find more efficient ways to work.

Foundation models can ease the job of training autonomous vehicles and robots that assist humans on factory floors and in logistics centers. They also help train autonomous vehicles by creating realistic environments like the one below.

New uses for foundation models are emerging daily, as are challenges in applying them.

Several papers on foundation and generative AI models describe risks such as:

- amplifying bias implicit in the massive datasets used to train models,

- introducing inaccurate or misleading information in images or videos, and

- violating intellectual property rights of existing works.

“Given that future AI systems will likely rely heavily on foundation models, it is imperative that we, as a community, come together to develop more rigorous principles for foundation models and guidance for their responsible development and deployment,” said the Stanford paper on foundation models.

Current ideas for safeguards include filtering prompts and their outputs, recalibrating models on the fly and scrubbing massive datasets.
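The first of those ideas, filtering prompts and their outputs, can be pictured as a thin wrapper around the model. The sketch below is a deliberately simple illustration under stated assumptions: the blocklist, the refusal message and the `guarded_generate` function are hypothetical, and real deployments typically rely on trained classifiers rather than keyword patterns.

```python
import re

# Hypothetical blocklist; real systems typically use trained classifiers.
BLOCKED_PATTERNS = [
    re.compile(r"\bcredit card number\b", re.IGNORECASE),
    re.compile(r"\bhome address\b", re.IGNORECASE),
]

REFUSAL = "Sorry, I can't help with that request."


def is_allowed(text):
    """Return True if no blocked pattern appears in the text."""
    return not any(pattern.search(text) for pattern in BLOCKED_PATTERNS)


def guarded_generate(prompt, generate):
    """Filter the prompt before generation and the output after it.

    `generate` is any callable that maps a prompt string to a response
    string; it stands in for a call to a foundation model.
    """
    if not is_allowed(prompt):
        return REFUSAL
    response = generate(prompt)
    if not is_allowed(response):
        return REFUSAL
    return response


def echo_model(prompt):
    """Toy stand-in for a model: it just repeats the prompt."""
    return f"You asked: {prompt}"


print(guarded_generate("Suggest a jazz album from 1956.", echo_model))
print(guarded_generate("Find this person's home address.", echo_model))
```

Checking both sides matters because a harmless-looking prompt can still produce an output that should not be shown.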

“These are issues we’re working on as a research community,” said Bryan Catanzaro, vice president of applied deep learning research at NVIDIA. “For these models to be really widely deployed, we have to invest a lot in safety.”

It’s one more field AI researchers and developers are plowing as they create the future.