Within the age of AI reasoning, coaching smarter, extra succesful fashions is crucial to scaling intelligence. Delivering the huge efficiency to fulfill this new age requires breakthroughs throughout GPUs, CPUs, NICs, scale-up and scale-out networking, system architectures, and mountains of software program and algorithms.

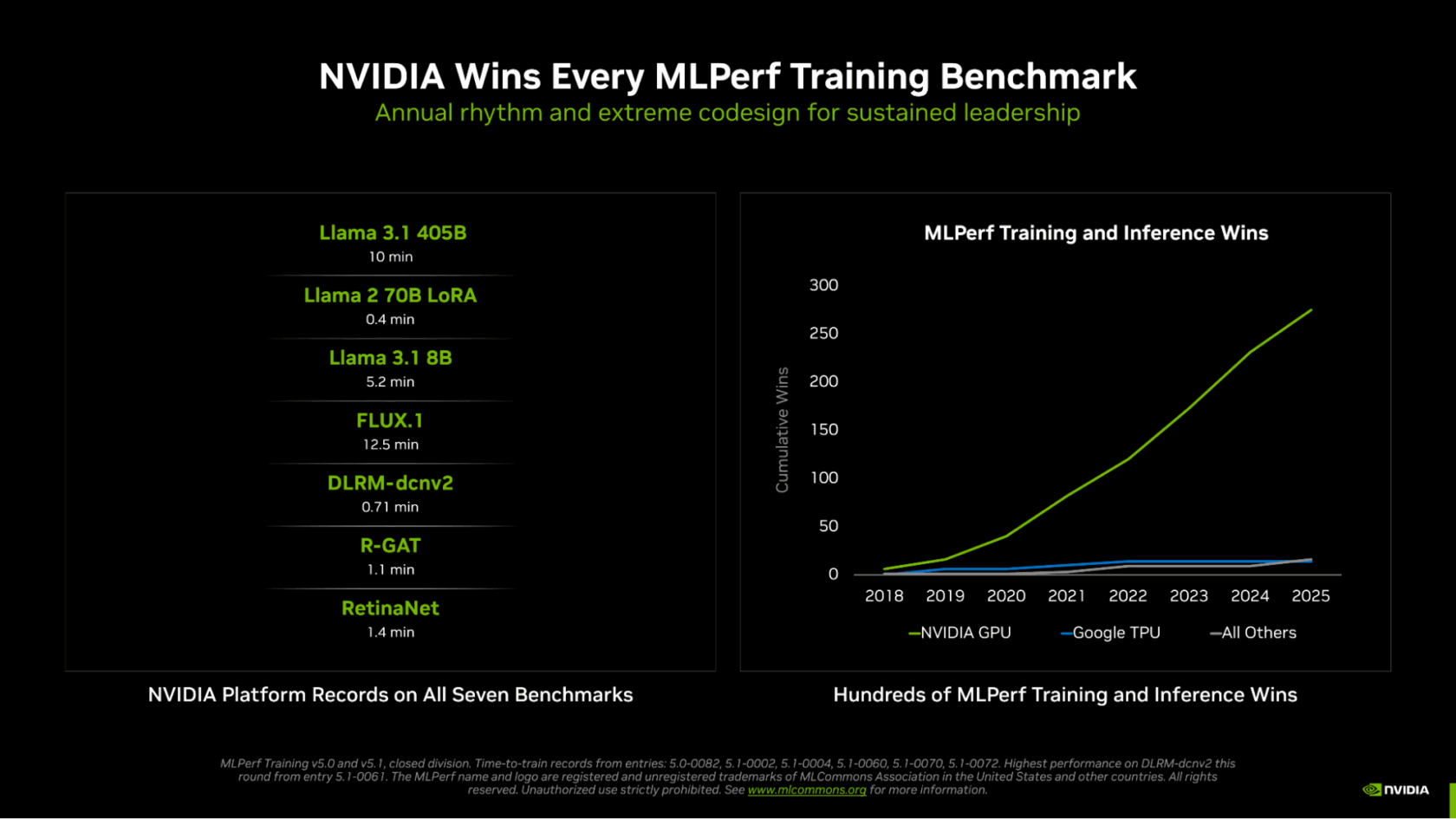

In MLPerf Coaching v5.1 — the newest spherical in a long-running collection of industry-standard assessments of AI coaching efficiency — NVIDIA swept all seven assessments, delivering the quickest time to coach throughout giant language fashions (LLMs), picture technology, recommender methods, pc imaginative and prescient and graph neural networks.

NVIDIA was additionally the one platform to submit outcomes on each take a look at, underscoring the wealthy programmability of NVIDIA GPUs, and the maturity and flexibility of its CUDA software program stack.

NVIDIA Blackwell Extremely Doubles Down

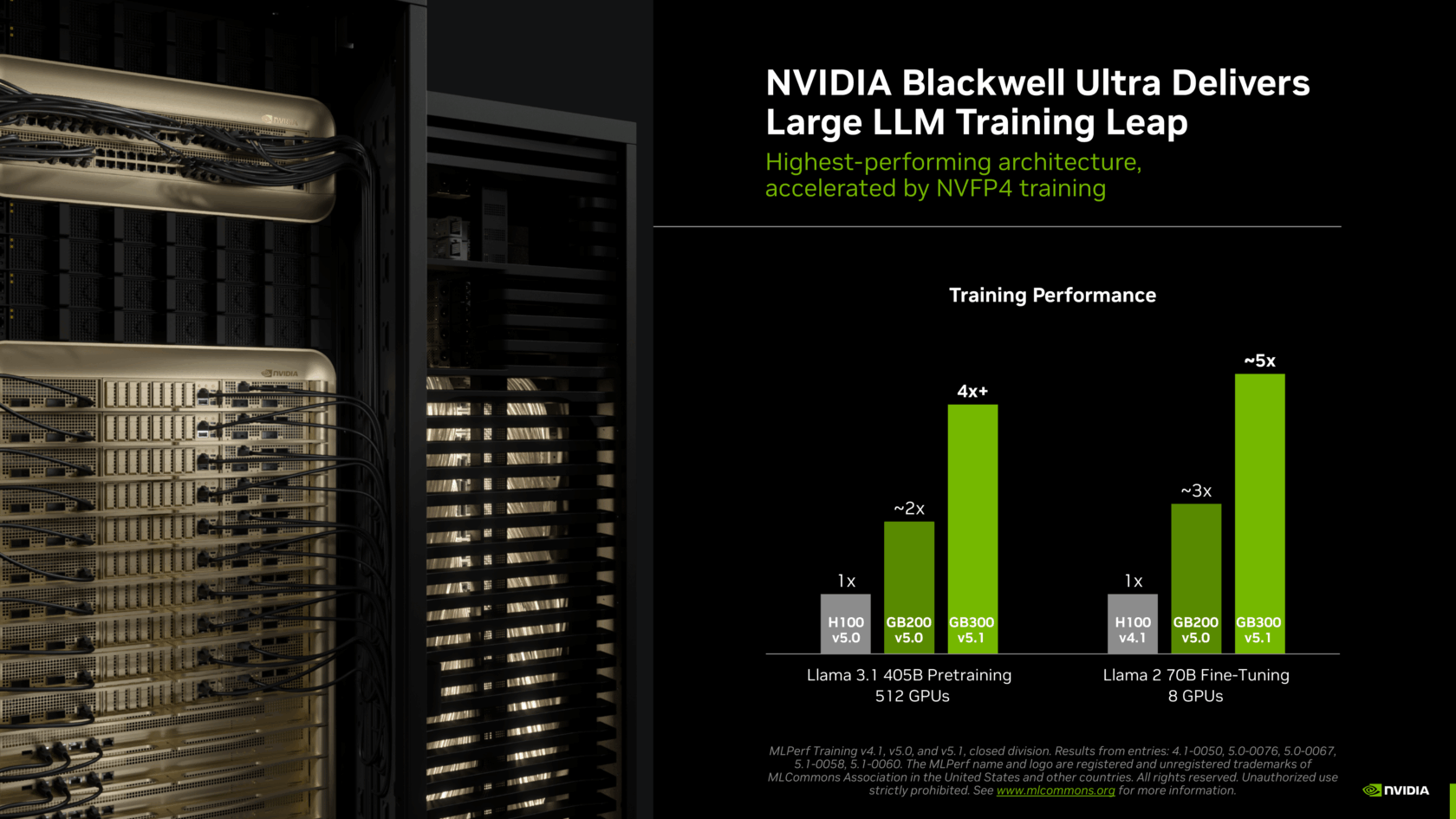

The GB300 NVL72 rack-scale system, powered by the NVIDIA Blackwell Extremely GPU structure, made its debut in MLPerf Coaching this spherical, following a record-setting displaying within the most up-to-date MLPerf Inference spherical.

In contrast with the prior-generation Hopper structure, the Blackwell Extremely-based GB300 NVL72 delivered greater than 4x the Llama 3.1 405B pretraining and practically 5x the Llama 2 70B LoRA fine-tuning efficiency utilizing the identical variety of GPUs.

These positive factors have been fueled by Blackwell Extremely’s architectural enhancements — together with new Tensor Cores that supply 15 petaflops of NVFP4 AI compute, twice the attention-layer compute and 279GB of HBM3e reminiscence — in addition to new coaching strategies that tapped into the structure’s monumental NVFP4 compute efficiency.

Connecting a number of GB300 NVL72 methods, the NVIDIA Quantum-X800 InfiniBand platform — the {industry}’s first end-to-end 800 Gb/s networking platform — additionally made its MLPerf debut, doubling scale-out networking bandwidth in contrast with the prior technology.

Efficiency Unlocked: NVFP4 Accelerates LLM Coaching

Key to the excellent outcomes this spherical was performing calculations utilizing NVFP4 precision — a primary within the historical past of MLPerf Coaching.

One option to enhance compute efficiency is to construct an structure able to performing computations on information represented with fewer bits, after which to carry out these calculations at a sooner charge. Nevertheless, decrease precision means much less info is offered in every calculation. This implies utilizing low-precision calculations within the coaching course of requires cautious design selections to maintain outcomes correct.

NVIDIA groups innovated at each layer of the stack to undertake FP4 precision for LLM coaching. The NVIDIA Blackwell GPU can carry out FP4 calculations — together with the NVIDIA-designed NVFP4 format in addition to different FP4 variants — at double the speed of FP8. Blackwell Extremely boosts that to 3x, enabling the GPUs to ship considerably better AI compute efficiency.

NVIDIA is the one platform thus far that has submitted MLPerf Coaching outcomes with calculations carried out utilizing FP4 precision whereas assembly the benchmark’s strict accuracy necessities.

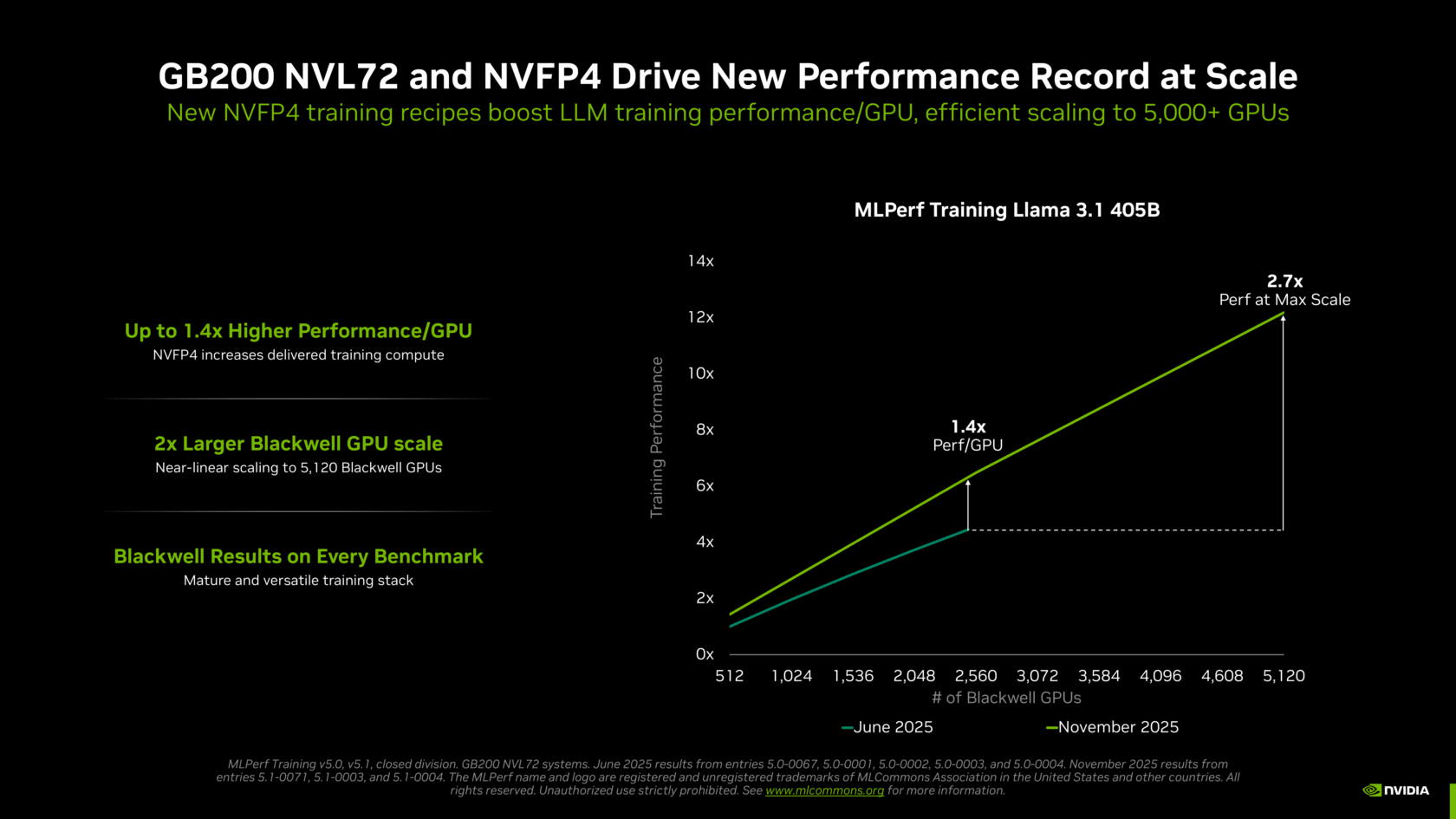

NVIDIA Blackwell Scales to New Heights

NVIDIA set a brand new Llama 3.1 405B time-to-train file of simply 10 minutes, powered by greater than 5,000 Blackwell GPUs working collectively effectively. This entry was 2.7x sooner than the very best Blackwell-based outcome submitted within the prior spherical, ensuing from environment friendly scaling to greater than twice the variety of GPUs, in addition to using NVFP4 precision to dramatically enhance the efficient efficiency of every Blackwell GPU.

For instance the efficiency enhance per GPU, NVIDIA submitted outcomes this spherical utilizing 2,560 Blackwell GPUs, reaching a time to coach of 18.79 minutes — 45% sooner than the submission final spherical utilizing 2,496 GPUs.

New Benchmarks, New Information

NVIDIA additionally set efficiency data on the 2 new benchmarks added this spherical: Llama 3.1 8B and FLUX.1.

Llama 3.1 8B — a compact but extremely succesful LLM — changed the long-running BERT-large mannequin, including a contemporary, smaller LLM to the benchmark suite. NVIDIA submitted outcomes with as much as 512 Blackwell Extremely GPUs, setting the bar at 5.2 minutes to coach.

As well as, FLUX.1 — a state-of-the-art picture technology mannequin — changed Secure Diffusion v2, with solely the NVIDIA platform submitting outcomes on the benchmark. NVIDIA submitted outcomes utilizing 1,152 Blackwell GPUs, setting a file time to coach of 12.5 minutes.

NVIDIA continued to carry data on the prevailing graph neural community, object detection and recommender system assessments.

A Broad and Deep Companion Ecosystem

The NVIDIA ecosystem participated extensively this spherical, with compelling submissions from 15 organizations together with ASUSTeK, Dell Applied sciences, Giga Computing, Hewlett Packard Enterprise, Krai, Lambda, Lenovo, Nebius, Quanta Cloud Know-how, Supermicro, College of Florida, Verda (previously DataCrunch) and Wiwynn.

NVIDIA is innovating at a one-year rhythm, driving important and fast efficiency will increase throughout pretraining, post-training and inference — paving the best way to new ranges of intelligence and accelerating AI adoption.

See extra NVIDIA efficiency information on the Information Heart Deep Studying Product Efficiency Hub and Efficiency Explorer pages.