NVIDIA today announced Nemotron-4 340B, a family of open models that developers can use to generate synthetic data for training large language models (LLMs) for commercial applications across healthcare, finance, manufacturing, retail and every other industry.

High-quality training data plays a critical role in the performance, accuracy and quality of responses from a custom LLM, but robust datasets can be prohibitively expensive and difficult to access.

Through a uniquely permissive open model license, Nemotron-4 340B gives developers a free, scalable way to generate synthetic data that can help build powerful LLMs.

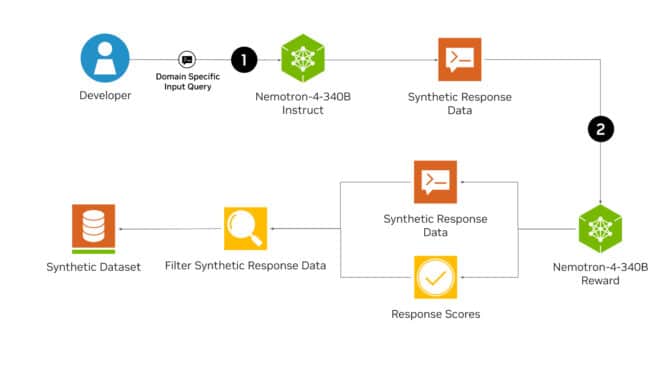

The Nemotron-4 340B family includes base, instruct and reward models that form a pipeline to generate synthetic data used for training and refining LLMs. The models are optimized to work with NVIDIA NeMo, an open-source framework for end-to-end model training, including data curation, customization and evaluation. They’re also optimized for inference with the open-source NVIDIA TensorRT-LLM library.

Nemotron-4 340B can be downloaded now from Hugging Face. Developers will soon be able to access the models at ai.nvidia.com, where they’ll be packaged as an NVIDIA NIM microservice with a standard application programming interface that can be deployed anywhere.

Navigating Nemotron to Generate Synthetic Data

LLMs can help developers generate synthetic training data in scenarios where access to large, diverse labeled datasets is limited.

The Nemotron-4 340B Instruct model creates diverse synthetic data that mimics the characteristics of real-world data, helping improve data quality to increase the performance and robustness of custom LLMs across various domains.

Then, to boost the quality of the AI-generated data, developers can use the Nemotron-4 340B Reward model to filter for high-quality responses. Nemotron-4 340B Reward grades responses on five attributes: helpfulness, correctness, coherence, complexity and verbosity. It’s currently in first place on the Hugging Face RewardBench leaderboard, created by AI2, for evaluating the capabilities, safety and pitfalls of reward models.
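The filtering step can be sketched in a few lines of Python. This is a minimal illustration and not NVIDIA's pipeline: the `ScoredResponse` type, the `filter_by_reward` helper, the thresholds and the score values are all hypothetical, and a real workflow would obtain the per-attribute scores from the reward model rather than hard-coding them.

```python
from dataclasses import dataclass
from typing import Dict, List

# The five attributes the reward model grades, as named in the article.
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")


@dataclass
class ScoredResponse:
    """Hypothetical container for one generated response and its scores."""
    prompt: str
    response: str
    scores: Dict[str, float]


def filter_by_reward(
    candidates: List[ScoredResponse],
    min_scores: Dict[str, float],
) -> List[ScoredResponse]:
    """Keep only candidates that meet every threshold in min_scores."""
    kept = []
    for cand in candidates:
        # A missing attribute counts as failing its threshold.
        if all(
            cand.scores.get(attr, float("-inf")) >= floor
            for attr, floor in min_scores.items()
        ):
            kept.append(cand)
    return kept


# Illustrative values only; they are not real reward-model outputs.
candidates = [
    ScoredResponse(
        "What is LoRA?",
        "A low-rank fine-tuning method.",
        {"helpfulness": 3.6, "correctness": 3.8, "coherence": 3.9,
         "complexity": 1.5, "verbosity": 1.2},
    ),
    ScoredResponse(
        "What is LoRA?",
        "It is a thing.",
        {"helpfulness": 0.9, "correctness": 2.0, "coherence": 3.0,
         "complexity": 0.4, "verbosity": 0.3},
    ),
]

kept = filter_by_reward(candidates, {"helpfulness": 3.0, "correctness": 3.0})
```

Thresholding on a subset of attributes, as here, is one design choice among several; a weighted sum across all five would work just as well in this sketch.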

Researchers can also create their own instruct or reward models by customizing the Nemotron-4 340B Base model using their proprietary data, combined with the included HelpSteer2 dataset.

Fine-Tuning With NeMo, Optimizing for Inference With TensorRT-LLM

Using open-source NVIDIA NeMo and NVIDIA TensorRT-LLM, developers can optimize the efficiency of their instruct and reward models to generate synthetic data and to score responses.

All Nemotron-4 340B models are optimized with TensorRT-LLM to take advantage of tensor parallelism, a type of model parallelism in which individual weight matrices are split across multiple GPUs and servers, enabling efficient inference at scale.
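To make the idea of splitting a weight matrix concrete, here is a toy pure-Python sketch. It is not how TensorRT-LLM is implemented: the shards are plain lists standing in for GPUs, the helper names are made up for illustration, and only a column-wise split is shown.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain matrix multiply: (n x k) times (k x m) gives (n x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


def split_columns(w: Matrix, num_shards: int) -> List[Matrix]:
    """Split w column-wise into equal shards, one per simulated device."""
    cols = len(w[0])
    assert cols % num_shards == 0, "columns must divide evenly across shards"
    width = cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(num_shards)]


def tensor_parallel_matmul(x: Matrix, w: Matrix, num_shards: int) -> Matrix:
    """Multiply x by each column shard, then join the partial outputs."""
    shards = split_columns(w, num_shards)
    # Each partial product would run on its own GPU in a real deployment.
    partials = [matmul(x, shard) for shard in shards]
    # Concatenate along the column axis (an all-gather across devices in practice).
    return [sum((p[r] for p in partials), []) for r in range(len(x))]


x = [[1.0, 2.0], [3.0, 4.0]]
w = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 3.0]]

sharded = tensor_parallel_matmul(x, w, num_shards=2)
reference = matmul(x, w)
```

Each simulated device holds only half of the weight matrix, yet the joined result equals the single-device product, which is what allows a model too large for one GPU to be served across several.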

Nemotron-4 340B Base, trained on 9 trillion tokens, can be customized using the NeMo framework to adapt to specific use cases or domains. This fine-tuning process benefits from extensive pretraining data and yields more accurate outputs for specific downstream tasks.

A variety of customization methods are available through the NeMo framework, including supervised fine-tuning and parameter-efficient fine-tuning methods such as low-rank adaptation, or LoRA.
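For readers new to LoRA, the sketch below shows why it counts as parameter-efficient. It follows the standard LoRA formulation, in which a frozen weight W is adjusted by a scaled low-rank product, W + (alpha / r) * B * A. It does not use the NeMo API, the function names are illustrative, and the layer dimensions are arbitrary rather than Nemotron-4 340B's actual sizes.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def lora_param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    full = d_out * d_in
    lora = rank * (d_in + d_out)  # B is d_out x r, A is r x d_in
    return full, lora


def lora_effective_weight(w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    """Compute W + (alpha / r) * B @ A without modifying the frozen W."""
    r = len(a)
    scale = alpha / r
    d_out, d_in = len(w), len(w[0])
    return [
        [w[i][j] + scale * sum(b[i][k] * a[k][j] for k in range(r)) for j in range(d_in)]
        for i in range(d_out)
    ]


# Arbitrary example sizes: a 4096 x 4096 layer adapted at rank 8.
full, lora = lora_param_counts(4096, 4096, 8)

# Tiny worked example: rank-1 update to a 2 x 2 identity matrix.
w = [[1.0, 0.0], [0.0, 1.0]]
b = [[1.0], [2.0]]
a = [[3.0, 4.0]]
adapted = lora_effective_weight(w, a, b, alpha=2.0)
```

In this example the adapter trains 65,536 values in place of 16,777,216, a 256-fold reduction for that one layer.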

To boost model quality, developers can align their models with NeMo Aligner and datasets annotated by Nemotron-4 340B Reward. Alignment is a key step in training LLMs, where a model’s behavior is fine-tuned using algorithms like reinforcement learning from human feedback (RLHF) to ensure its outputs are safe, accurate, contextually appropriate and consistent with its intended goals.
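One common way to use reward-annotated data for alignment is to turn it into chosen and rejected pairs for preference-based training. The sketch below assumes that workflow. The pairing rule, the `min_margin` parameter and the output field names are hypothetical, and nothing here reflects NeMo Aligner's actual data format.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def build_preference_pairs(
    rows: List[Tuple[str, str, float]],
    min_margin: float = 0.5,
) -> List[Dict[str, str]]:
    """Pair the best and worst scored response for each prompt.

    rows holds (prompt, response, reward_score) tuples. A pair is kept only
    when the score gap is at least min_margin, so near-ties are skipped.
    """
    by_prompt = defaultdict(list)
    for prompt, response, score in rows:
        by_prompt[prompt].append((score, response))

    pairs = []
    for prompt, scored in by_prompt.items():
        if len(scored) < 2:
            continue  # a preference needs at least two responses
        best, worst = max(scored), min(scored)
        if best[0] - worst[0] >= min_margin:
            pairs.append({"prompt": prompt, "chosen": best[1], "rejected": worst[1]})
    return pairs


# Illustrative scores only; a real pipeline would take them from the reward model.
rows = [
    ("Explain RLHF.", "RLHF fine-tunes a model using human feedback.", 3.7),
    ("Explain RLHF.", "It is a training thing.", 1.1),
    ("Define LLM.", "A large language model.", 3.0),
    ("Define LLM.", "A big language model.", 2.9),
]

pairs = build_preference_pairs(rows)
```

Only the first prompt produces a pair here, because the two responses to the second prompt score too closely to express a clear preference.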

Businesses seeking enterprise-grade support and security for production environments can also access NeMo and TensorRT-LLM through the cloud-native NVIDIA AI Enterprise software platform, which provides accelerated and efficient runtimes for generative AI foundation models.

Evaluating Model Security and Getting Started

The Nemotron-4 340B Instruct model underwent extensive safety evaluation, including adversarial tests, and performed well across a wide range of risk indicators. Users should still carefully evaluate the model’s outputs to ensure the synthetically generated data is suitable, safe and accurate for their use case.

For more information on model security and safety evaluation, read the model card.

Download Nemotron-4 340B models via Hugging Face. For more details, read the research papers on the model and dataset.

See notice regarding software product information.