2025 marked a breakout year for AI development on PC.

PC-class small language models (SLMs) improved accuracy by nearly 2x over 2024, dramatically closing the gap with frontier cloud-based large language models (LLMs). AI PC developer tools including Ollama, ComfyUI, llama.cpp and Unsloth have matured, their popularity has doubled year over year and the number of users downloading PC-class models grew tenfold from 2024.

These developments are paving the way for generative AI to gain widespread adoption among everyday PC creators, gamers and productivity users this year.

At CES this week, NVIDIA is announcing a wave of AI upgrades for GeForce RTX, NVIDIA RTX PRO and NVIDIA DGX Spark devices that unlock the performance and memory needed for developers to deploy generative AI on PC, including:

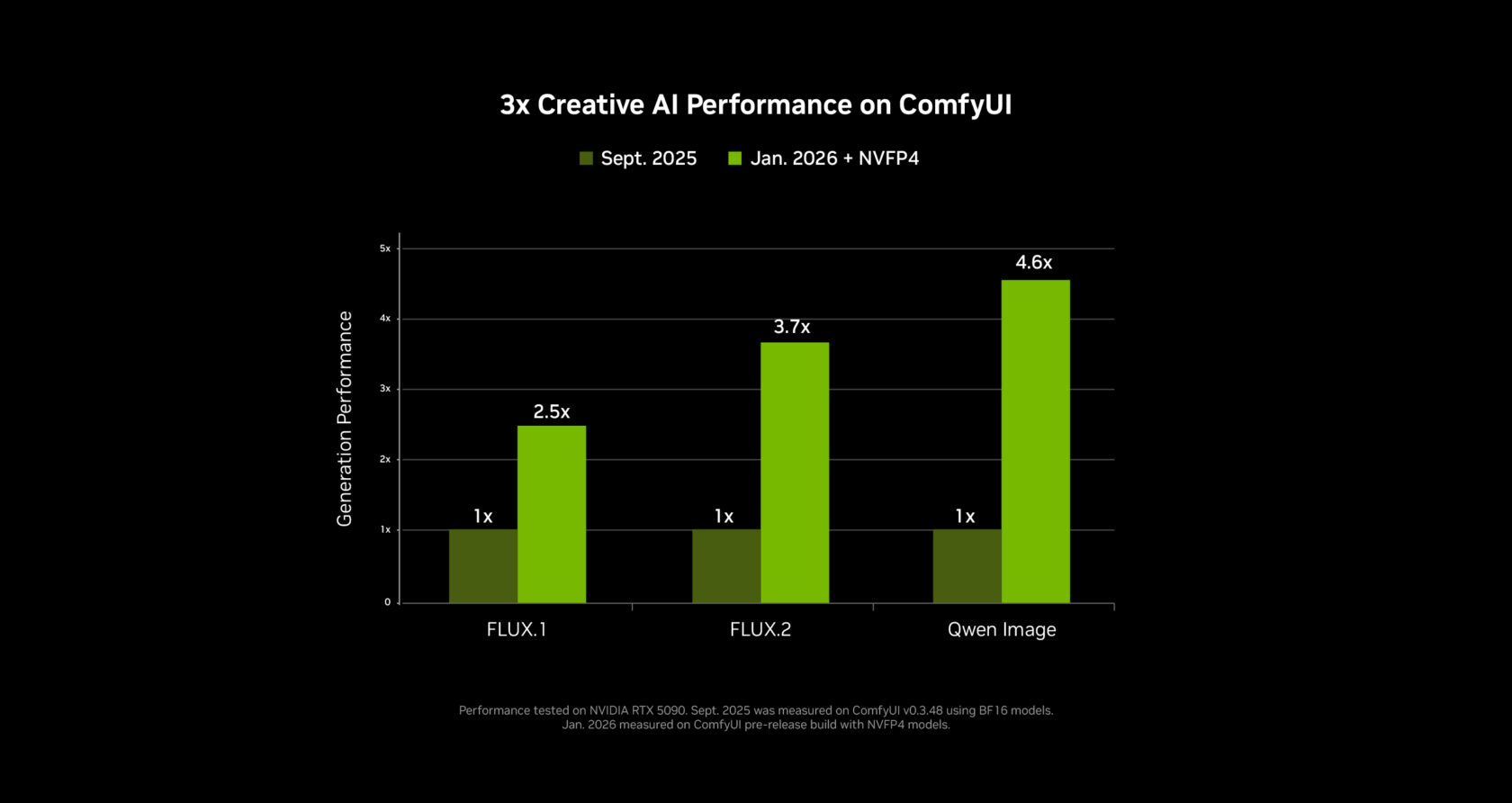

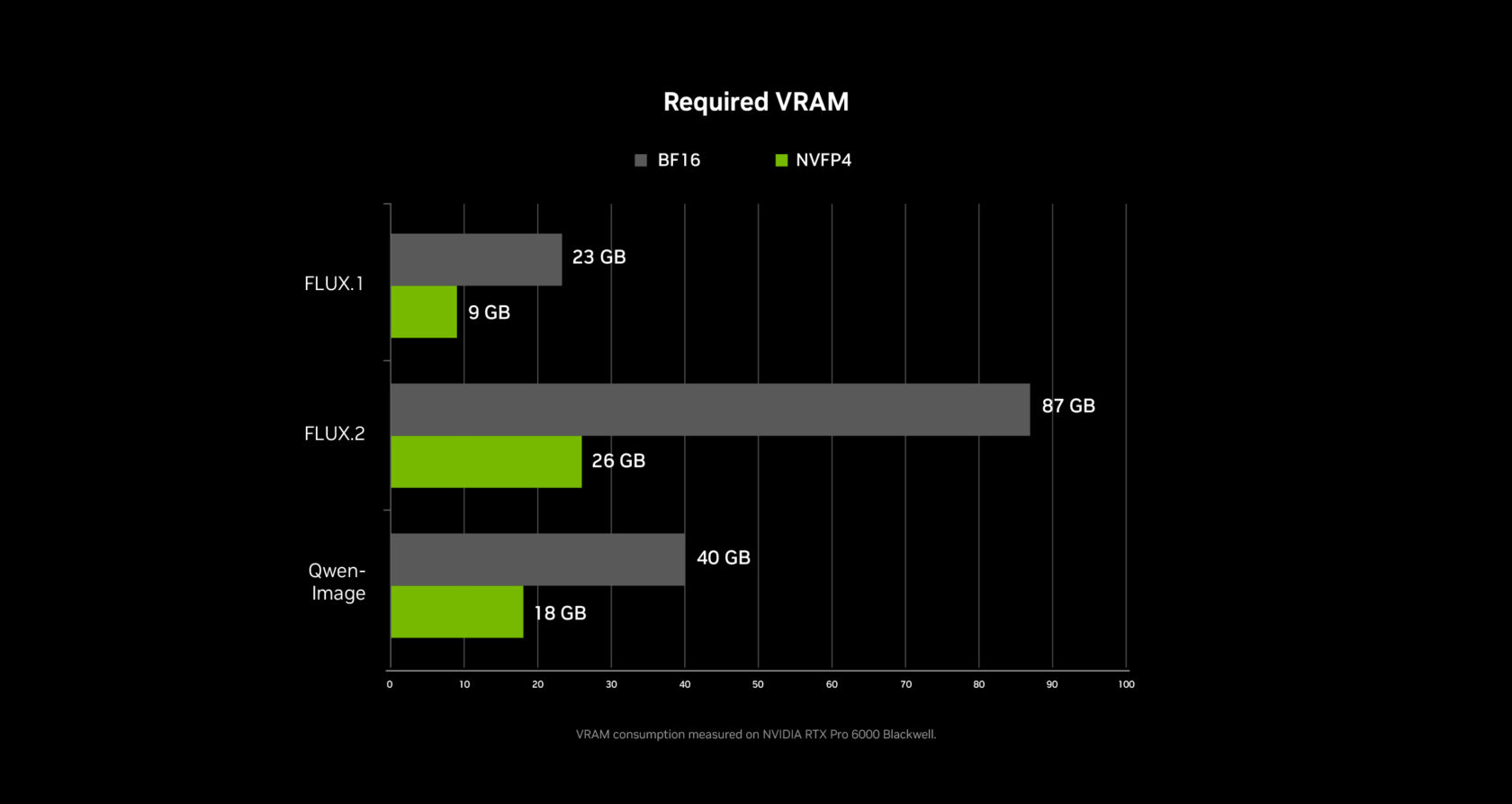

- Up to 3x performance and a 60% reduction in VRAM for video and image generative AI via PyTorch-CUDA optimizations and native NVFP4/FP8 precision support in ComfyUI.

- RTX Video Super Resolution integration in ComfyUI, accelerating 4K video generation.

- NVIDIA NVFP8 optimizations for the open weights release of Lightricks’ state-of-the-art LTX-2 audio-video generation model.

- A new video generation pipeline for generating 4K AI video using a 3D scene in Blender to precisely control outputs.

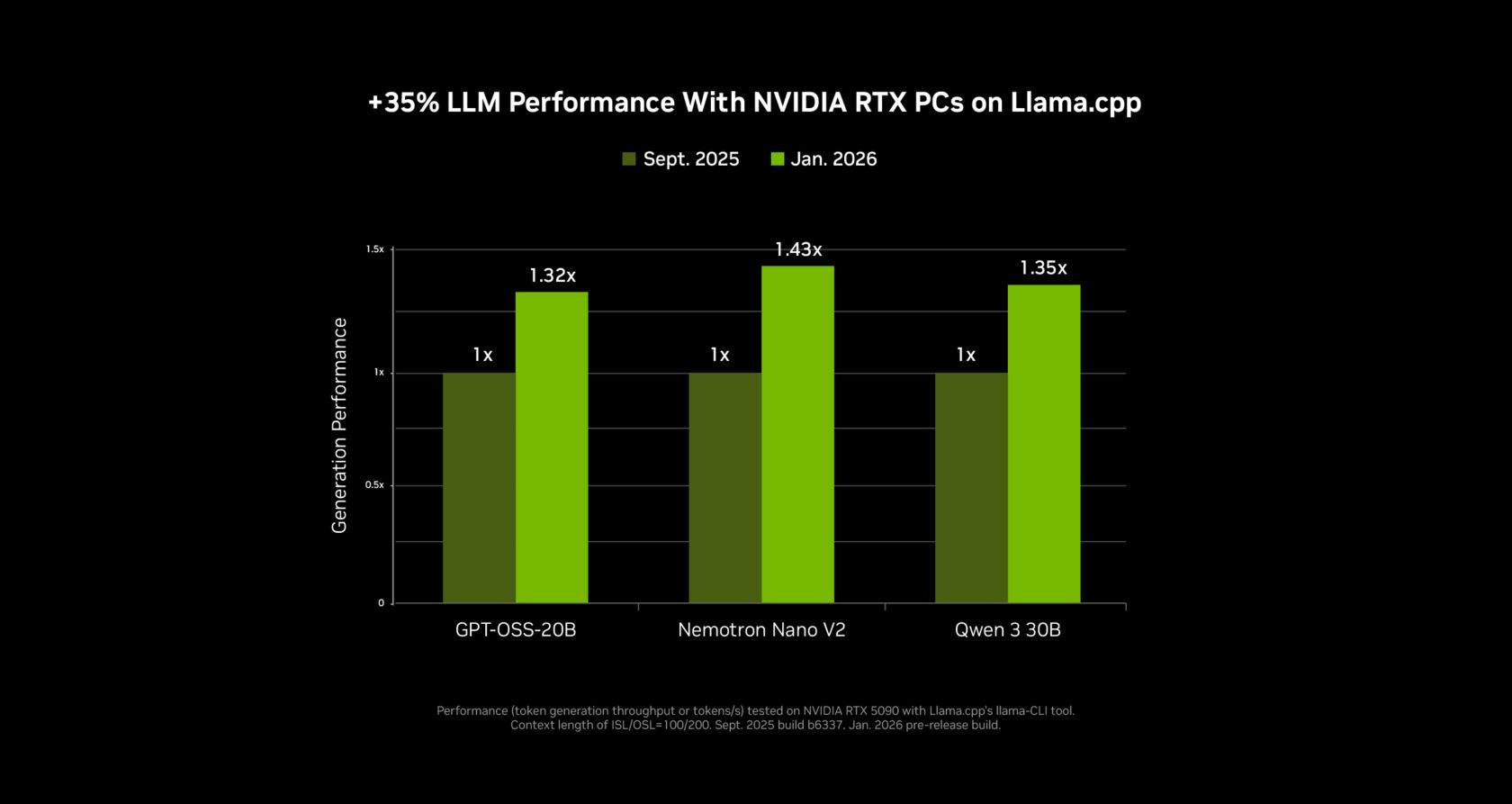

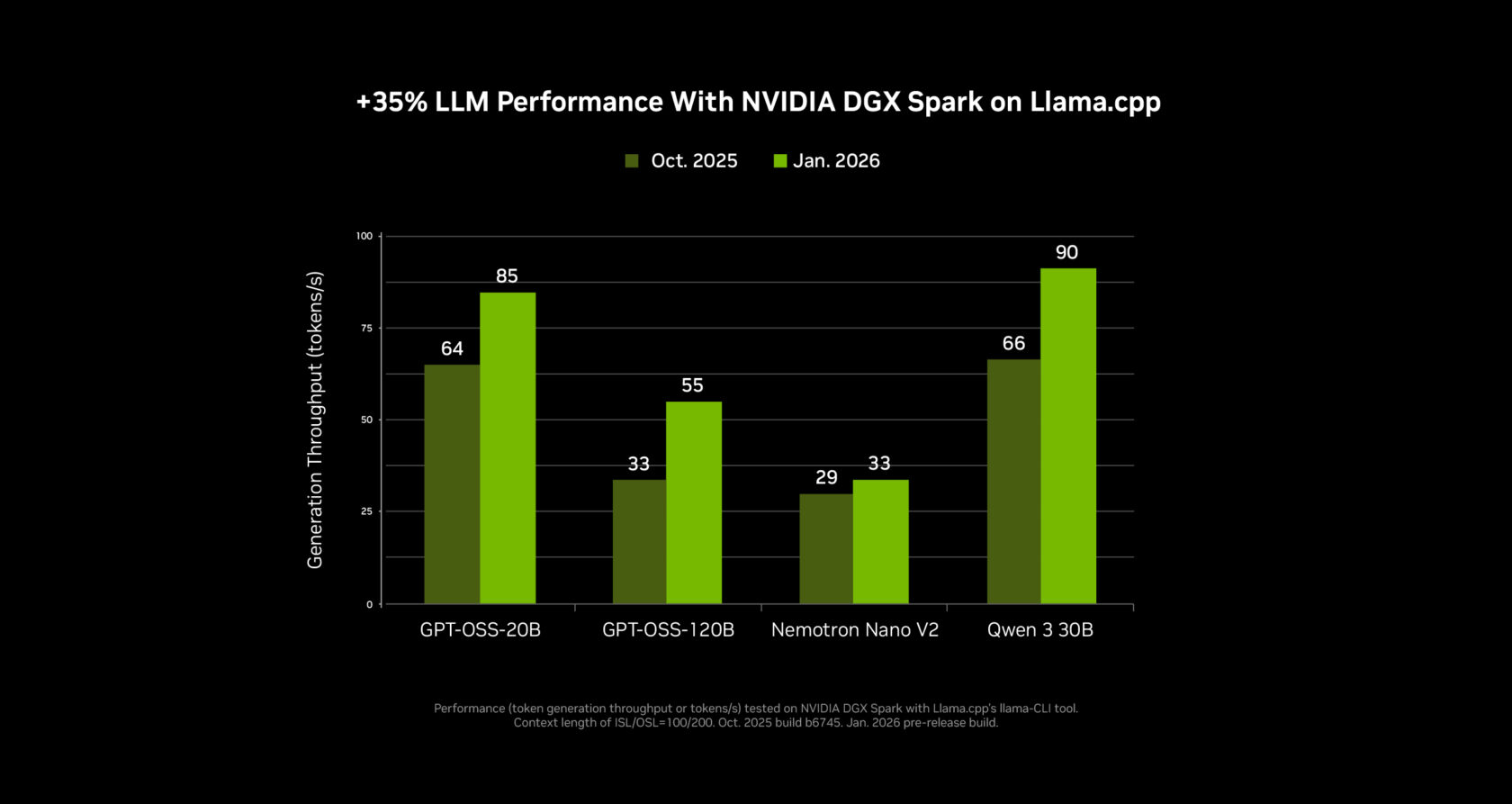

- Up to 35% faster inference performance for SLMs via Ollama and llama.cpp.

- RTX acceleration for the new video search capability in Nexa.ai’s Hyperlink.

These advancements will allow users to seamlessly run advanced video, image and language AI workflows with the privacy, security and low latency offered by local RTX AI PCs.

Generate Videos 3x Faster and in 4K on RTX PCs

Generative AI can make amazing videos, but online tools can be difficult to control with just prompts. And trying to generate 4K videos is nearly impossible, as most models are too large to fit in PC VRAM.

Today, NVIDIA is introducing an RTX-powered video generation pipeline that enables artists to gain accurate control over their generations while generating videos 3x faster and upscaling them to 4K, using only a fraction of the VRAM.

This video pipeline allows emerging artists to create a storyboard, turn it into photorealistic keyframes and then turn those keyframes into a high-quality 4K video. The pipeline is split into three blueprints that artists can mix and match or modify to their needs:

- A 3D object generator that creates assets for scenes.

- A 3D-guided image generator that allows users to set their scene in Blender and generate photorealistic keyframes from it.

- A video generator that follows a user’s start and end keyframes to animate their video, and uses NVIDIA RTX Video technology to upscale it to 4K.

This pipeline is made possible by the groundbreaking release of the new LTX-2 model from Lightricks, available for download today.

A major milestone for local AI video creation, LTX-2 delivers results that stand toe-to-toe with leading cloud-based models while generating up to 20 seconds of 4K video with impressive visual fidelity. The model features built-in audio, multi-keyframe support and advanced conditioning capabilities enhanced with controllability low-rank adaptations, giving creators cinematic-level quality and control without relying on cloud dependencies.

Under the hood, the pipeline is powered by ComfyUI. Over the past few months, NVIDIA has worked closely with ComfyUI to optimize performance by 40% on NVIDIA GPUs, and the latest update adds support for the NVFP4 and NVFP8 data formats. All combined, performance is 3x faster and VRAM is reduced by 60% with RTX 50 Series’ NVFP4 format, and performance is 2x faster and VRAM is reduced by 40% with NVFP8.
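To make those figures concrete, the short sketch below applies the quoted "up to" gains to a baseline run. The baseline values (90 seconds per clip, 20 GB of VRAM) and the function name `project` are hypothetical and chosen only for illustration; real results will depend on the model, resolution and GPU.

```python
# Figures quoted in the text: (speedup factor, fractional VRAM reduction).
QUOTED_GAINS = {
    "NVFP4": (3.0, 0.60),
    "NVFP8": (2.0, 0.40),
}


def project(baseline_seconds: float, baseline_vram_gb: float, fmt: str) -> tuple[float, float]:
    """Scale a baseline run by the quoted best-case gains for one data format."""
    speedup, vram_cut = QUOTED_GAINS[fmt]
    return baseline_seconds / speedup, baseline_vram_gb * (1.0 - vram_cut)


if __name__ == "__main__":
    # Hypothetical baseline: 90 s per clip using 20 GB of VRAM.
    for fmt in QUOTED_GAINS:
        seconds, vram_gb = project(90.0, 20.0, fmt)
        print(f"{fmt}: about {seconds:.0f} s and {vram_gb:.0f} GB")
```

Under these assumptions, a workload that needed 20 GB would need roughly 8 GB with NVFP4 and 12 GB with NVFP8, which is why the formats matter for fitting larger models on consumer GPUs.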

NVFP4 and NVFP8 checkpoints are now available for some of the top models directly in ComfyUI. These models include LTX-2 from Lightricks, FLUX.1 and FLUX.2 from Black Forest Labs, and Qwen-Image and Z-Image from Alibaba. Download them directly in ComfyUI, with additional model support coming soon.

Once a video clip is generated, it is upscaled to 4K in just seconds using the new RTX Video node in ComfyUI. This upscaler works in real time, sharpens edges and cleans up compression artifacts for a clear final image. RTX Video will be available in ComfyUI next month.

To help users push beyond the limits of GPU memory, NVIDIA has collaborated with ComfyUI to improve its memory offload feature, known as weight streaming. With weight streaming enabled, ComfyUI can use system RAM when it runs out of VRAM, enabling larger models and more complex multistage node graphs on mid-range RTX GPUs.
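The general idea behind offloading can be illustrated with a toy placement policy. This is a conceptual sketch only and is not ComfyUI's implementation: the names `Placement` and `place_weights`, the layer names and the sizes are all hypothetical. It keeps each block of weights in VRAM if it still fits within the budget and otherwise leaves it in system RAM, from where it would be streamed to the GPU when needed.

```python
from dataclasses import dataclass, field


@dataclass
class Placement:
    vram: list[str] = field(default_factory=list)
    system_ram: list[str] = field(default_factory=list)


def place_weights(layer_sizes_gb: dict[str, float], vram_budget_gb: float) -> Placement:
    """Toy greedy policy: a layer goes to VRAM if it still fits, else to system RAM."""
    placement = Placement()
    used_gb = 0.0
    for name, size_gb in layer_sizes_gb.items():  # dicts preserve insertion order
        if used_gb + size_gb <= vram_budget_gb:
            placement.vram.append(name)
            used_gb += size_gb
        else:
            placement.system_ram.append(name)
    return placement


if __name__ == "__main__":
    # Hypothetical model: 17 GB of weights on a GPU with a 12 GB budget.
    layers = {"text_encoder": 4.0, "transformer_a": 6.0, "transformer_b": 6.0, "vae": 1.0}
    result = place_weights(layers, vram_budget_gb=12.0)
    print("VRAM:", result.vram)
    print("System RAM:", result.system_ram)
```

The trade-off in any scheme like this is that weights held in system RAM must cross the PCIe bus before use, so offloading trades some speed for the ability to run models that would otherwise not fit.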

The video generation workflow will be available for download next month, with the newly released open weights of the LTX-2 Video Model and ComfyUI RTX updates available now.

A New Way to Search PC Files and Videos

File searching on PCs has been the same for decades. It still mostly relies on file names and spotty metadata, which makes tracking down that one document from last year way harder than it should be.

Hyperlink, Nexa.ai’s local search agent, turns RTX PCs into a searchable knowledge base that can answer questions in natural language with inline citations. It can scan and index documents, slides, PDFs and images, so searches can be driven by ideas and content instead of file name guesswork. All data is processed locally and stays on the user’s PC for privacy and security. Plus, it’s RTX-accelerated, taking 30 seconds per gigabyte to index text and image files and three seconds for a response on an RTX 5090 GPU, compared with an hour per gigabyte to index files and 90 seconds for a response on CPUs.
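The quoted figures work out to roughly a 120x indexing speedup and a 30x response speedup. The sketch below does that arithmetic and estimates indexing time for a hypothetical 50 GB library, assuming indexing time scales linearly with library size (an assumption made here for illustration, not a claim from Nexa.ai).

```python
# Figures quoted in the text.
GPU_INDEX_SECONDS_PER_GB = 30
CPU_INDEX_SECONDS_PER_GB = 60 * 60  # one hour per gigabyte
GPU_RESPONSE_SECONDS = 3
CPU_RESPONSE_SECONDS = 90


def index_minutes(library_gb: float, seconds_per_gb: float) -> float:
    """Estimated indexing time in minutes, assuming linear scaling with size."""
    return library_gb * seconds_per_gb / 60


if __name__ == "__main__":
    print("Indexing speedup:", CPU_INDEX_SECONDS_PER_GB / GPU_INDEX_SECONDS_PER_GB)
    print("Response speedup:", CPU_RESPONSE_SECONDS / GPU_RESPONSE_SECONDS)

    library_gb = 50  # hypothetical library size
    print("GPU minutes:", index_minutes(library_gb, GPU_INDEX_SECONDS_PER_GB))
    print("CPU minutes:", index_minutes(library_gb, CPU_INDEX_SECONDS_PER_GB))
```

Under these assumptions, the hypothetical 50 GB library would take about 25 minutes to index on the GPU versus about 50 hours on CPUs.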

At CES, Nexa.ai is unveiling a new beta version of Hyperlink that adds support for video content, enabling users to search through their videos for objects, actions and speech. This is ideal for users ranging from video artists looking for B-roll to gamers who want to find that time they won a battle royale match to share with their friends.

For those interested in trying the Hyperlink private beta, sign up for access on this webpage. Access will roll out starting this month.

Small Language Models Get 35% Faster

NVIDIA has collaborated with the open-source community to deliver major performance gains for SLMs on RTX GPUs and the NVIDIA DGX Spark desktop supercomputer using llama.cpp and Ollama. The latest changes are especially beneficial for mixture-of-experts models, including the new NVIDIA Nemotron 3 family of open models.

SLM inference performance has improved by 35% and 30% for llama.cpp and Ollama, respectively, over the past four months. These updates are available now, and a quality-of-life upgrade for llama.cpp also speeds up LLM loading times.

These speedups will be available in the next update of LM Studio, and will be coming soon to agentic apps like the new MSI AI Robot app. The MSI AI Robot app, which also takes advantage of the llama.cpp optimizations, lets users control their MSI device settings and will incorporate the latest updates in an upcoming release.

NVIDIA Broadcast 2.1 Brings Virtual Key Light to More PC Users

The NVIDIA Broadcast app improves the quality of a user’s PC microphone and webcam with AI effects, ideal for livestreaming and video conferencing.

Version 2.1 updates the Virtual Key Light effect to improve performance, making it available on RTX 3060 desktop GPUs and higher. The update also handles more lighting conditions, offers broader color temperature control and uses an updated HDRi base map for a two-key-light style often seen in professional streams. Download the NVIDIA Broadcast update today.

Transform an At-Home Creative Studio Into an AI Powerhouse With DGX Spark

As new and increasingly capable AI models arrive on PC each month, developer interest in more powerful and flexible local AI setups continues to grow. DGX Spark, a compact AI supercomputer that fits on users’ desks and pairs seamlessly with a primary desktop or laptop, enables experimenting, prototyping and running advanced AI workloads alongside an existing PC.

Spark is ideal for those interested in testing out LLMs or prototyping agentic workflows, or for artists who want to generate assets in parallel to their workflow so that their main PC is still available for editing.

At CES, NVIDIA is unveiling major AI performance updates to Spark, delivering up to 2.6x faster performance since it launched just under three months ago.

New DGX Spark playbooks are also available, including one for speculative decoding and another to fine-tune models with two DGX Spark modules.

Plug in to NVIDIA AI PC on Facebook, Instagram, TikTok and X, and stay informed by subscribing to the RTX AI PC newsletter. Follow NVIDIA Workstation on LinkedIn and X.

See notice regarding software product information.