At this week’s AI Infrastructure Summit in Silicon Valley, NVIDIA’s VP of Accelerated Computing Ian Buck unveiled a bold new vision: the transformation of traditional data centers into fully integrated AI factories.

As part of this initiative, NVIDIA is developing reference designs to be shared with partners and enterprises worldwide, offering an NVIDIA Omniverse Blueprint for building high-performance, energy-efficient infrastructure optimized for the age of AI reasoning.

Already, NVIDIA is collaborating with scores of companies across every layer of the stack, from building design and grid integration to power, cooling and orchestration.

It’s a natural evolution for the company, scaling beyond chips and systems into a new class of industrial products so complex and interconnected that no single player can build them alone.

NVIDIA, along with a deep bench of industrial and technology partners, is reactivating decades of infrastructure expertise to build this new class of AI factories.

Among these partners, Jacobs serves as the design integrator, helping to coordinate the physical and digital layers of the infrastructure to ensure seamless orchestration.

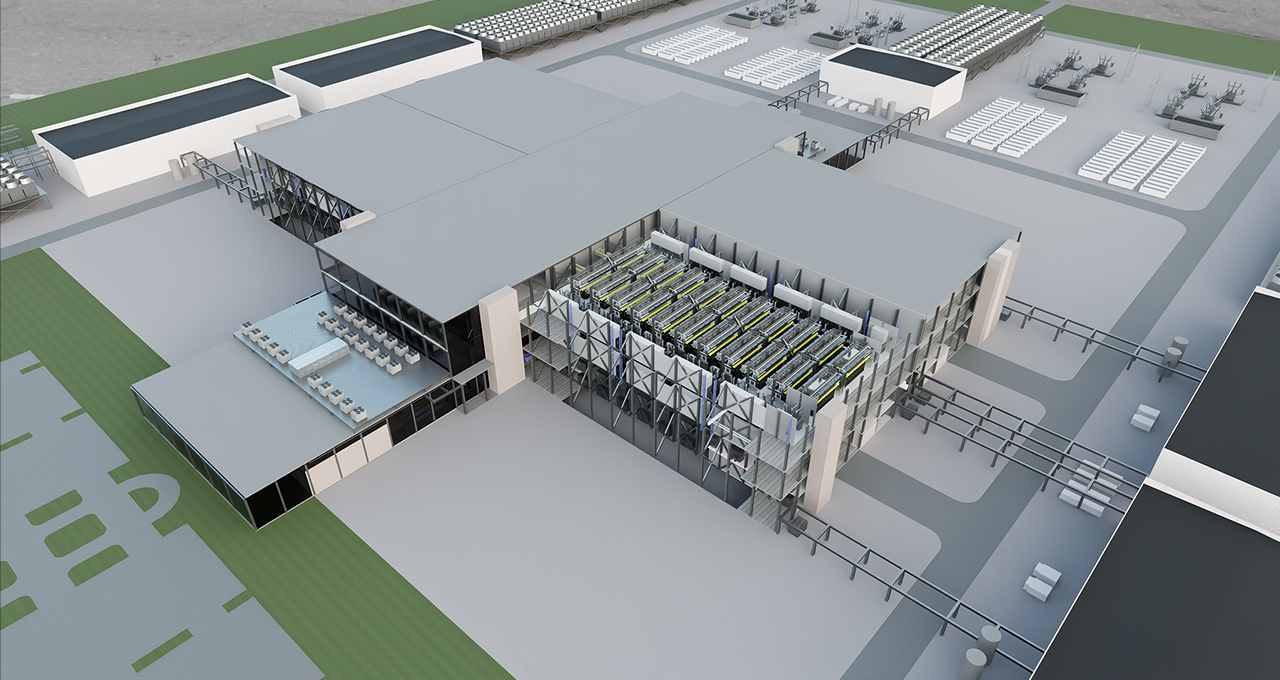

The embodiment of the reference design will be a digital twin of the AI factory. This digital twin integrates the IT systems inside the data center with the operational technology for power and cooling systems inside and outside the data center.

The new initiative expands the digital twin to integrate local power generation, energy storage systems, cooling technology and AI agents for operations.

Longtime collaborators in power and cooling (Schneider Electric, Siemens and Vertiv) have been instrumental in shaping resilient, high-efficiency environments tailored for AI-scale workloads.

Siemens and Siemens Energy play a critical role in on-premises power delivery, supporting the need for rapidly deployable, stable power to meet the gigawatt-scale energy demands of these facilities. GE Vernova collaborates in power generation and electrification to the rack.

These companies, along with a growing ecosystem of specialists in infrastructure design, simulation and orchestration (including Cadence, Emerald AI, E Tech Group, phaidra.ai, PTC, Schneider Electric with ETAP, Siemens and Vertech), are helping NVIDIA activate a system-level transformation.

At the heart of this vision lies a fundamental challenge: how to optimize every watt of energy that enters the facility so that it contributes directly to intelligence generation.

In today’s data center paradigm, buildings are often designed independently of the compute platforms they house, leading to inefficiencies in power distribution, cooling and system orchestration.

NVIDIA and its partners are flipping that model.

By designing the infrastructure and technology stack in tandem, the company enables true system-level optimization, in which power, cooling, compute and software are engineered as a unified whole.

Simulation plays a central role in this shift.

Companies will be able to share simulation-ready assets, allowing designers to model components in Omniverse using AI factory digital twins even before those components are physically available.

These digital twins not only optimize AI factories before they’re built; they also help manage them once they’re operational.

By adopting the OpenUSD framework, the simulation platform can accurately model every aspect of a facility’s operations, from power and cooling to networking infrastructure. This open and extensible approach allows for the creation of physically accurate assets, which in turn leads to the design of smarter, more reliable facilities.

And the complexity doesn’t stop at the facility walls.

AI factories must be plugged into broader systems (power grids, water supplies and transportation networks) that require careful coordination and simulation throughout their lifecycle to ensure reliability and scalability.

This work has already begun.

Earlier this year, NVIDIA introduced an Omniverse Blueprint for AI factory digital twins. This blueprint connects platforms like Cadence and ETAP, allowing partners to plug in their core tools to model gigawatt-scale facilities before a single physical AI factory site has even been chosen.

More recently, the company expanded its ecosystem with integrations from Delta, Jacobs, Siemens and Siemens Energy, enabling unified simulation of power, cooling and networking systems.

When this blueprint is complete next year, it will allow partners to plug into the system via application programming interfaces and simulation-ready digital assets, enabling real-time collaboration and orchestration across the entire lifecycle, from design to deployment to operation.

As a result of this work, where traditional facilities operated in isolation, AI factories will be designed for composability, resilience and scale.

Call to Action: Join developers, industry leaders, and innovators at NVIDIA GTC Washington, D.C., to explore the latest breakthroughs in AI infrastructure and learn from expert sessions, hands-on training, and partner showcases.

See notice regarding software product information.