In enterprise AI, understanding and working across multiple languages is no longer optional: it’s essential for meeting the needs of employees, customers and users worldwide.

Multilingual information retrieval (the ability to search, process and retrieve knowledge across languages) plays a key role in enabling AI to deliver more accurate and globally relevant outputs.

Enterprises can expand their generative AI efforts into accurate, multilingual systems using NVIDIA NeMo Retriever embedding and reranking NVIDIA NIM microservices, which are now available on the NVIDIA API catalog. These models can understand information across a wide range of languages and formats, such as documents, to deliver accurate, context-aware results at massive scale.

With NeMo Retriever, businesses can now:

- Extract knowledge from large and diverse datasets for additional context to deliver more accurate responses.

- Seamlessly connect generative AI to enterprise data in most major global languages to expand user audiences.

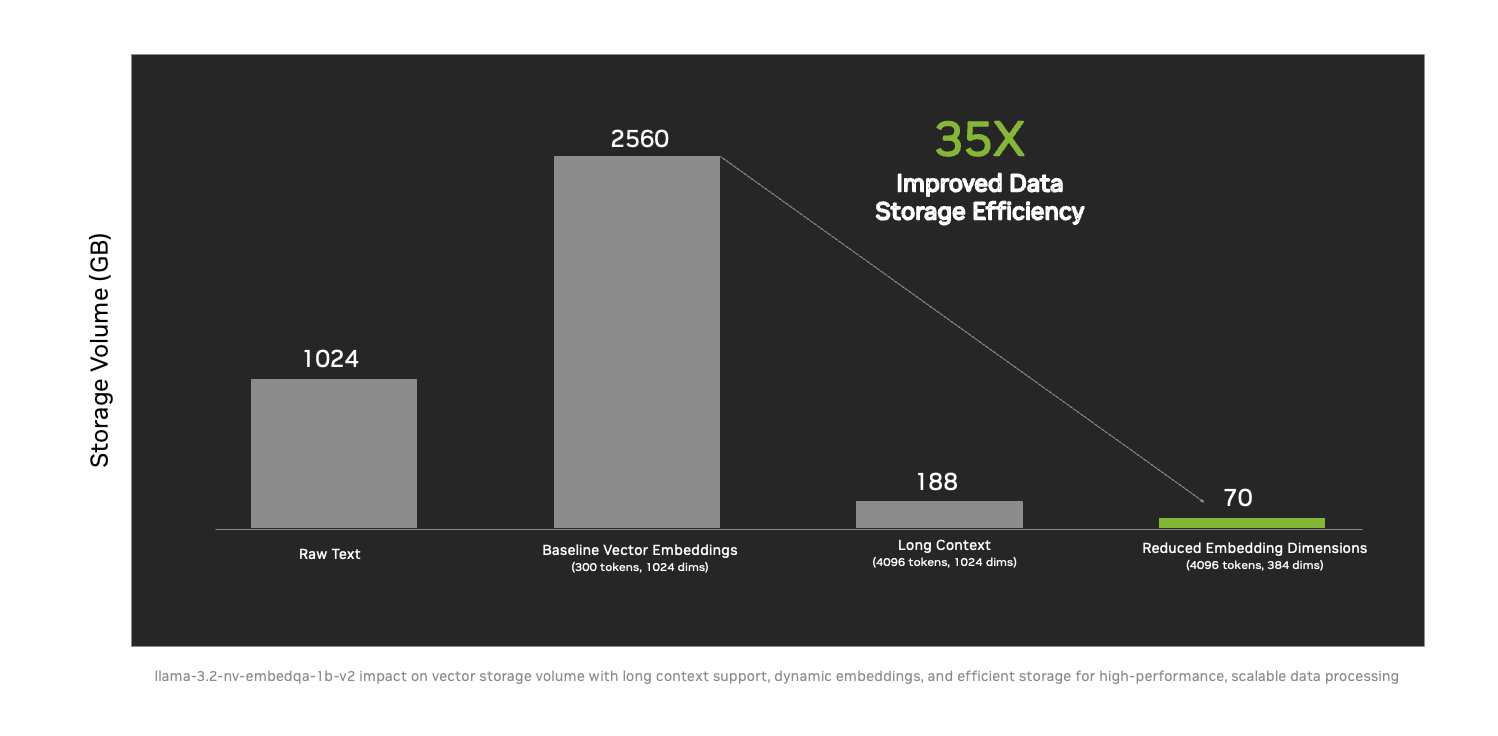

- Ship actionable intelligence at better scale with 35x improved knowledge storage effectivity by way of new methods resembling lengthy context help and dynamic embedding sizing.
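The storage side of dynamic embedding sizing can be illustrated with simple arithmetic. The Python sketch below assumes a Matryoshka-style model, in which the leading dimensions of an embedding carry most of the signal. Under that assumption, a vector can be truncated and re-normalized. The 2048 and 384 dimension sizes and the 10 million vector count are illustrative assumptions, not published NeMo Retriever figures. The sketch also does not reproduce how the 35x figure was measured.

```python
import math

def truncate_embedding(vec: list[float], dims: int) -> list[float]:
    """Keep the first `dims` components and rescale to unit length.

    Assumes a Matryoshka-style model whose leading dimensions carry most of
    the signal; truncating other embeddings this way can hurt accuracy.
    """
    if not 0 < dims <= len(vec):
        raise ValueError("dims must be between 1 and len(vec)")
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head] if norm else head

def flat_index_bytes(num_vectors: int, dims: int, bytes_per_value: int = 4) -> int:
    """Raw vector storage (default 4 bytes per float32 value), ignoring metadata and index overhead."""
    return num_vectors * dims * bytes_per_value

# Illustrative sizes (assumptions): 10 million vectors at full vs. truncated dimensionality.
NUM_VECTORS = 10_000_000
full = flat_index_bytes(NUM_VECTORS, 2048)
small = flat_index_bytes(NUM_VECTORS, 384)
print(f"{full / 1e9:.1f} GB -> {small / 1e9:.1f} GB ({full / small:.1f}x smaller)")
```

Raw storage shrinks in direct proportion to dimensionality. In this example, that means about 5.3x less space, traded against whatever retrieval accuracy the shorter vectors give up.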

Leading NVIDIA partners like DataStax, Cohesity, Cloudera, Nutanix, SAP, VAST Data and WEKA are already adopting these microservices to help organizations across industries securely connect custom models to diverse and large data sources. By using retrieval-augmented generation (RAG) techniques, NeMo Retriever enables AI systems to access richer, more relevant information and effectively bridge linguistic and contextual divides.
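In a RAG system, retrieved passages are inserted into the prompt so that the model answers from them. A minimal Python sketch of that grounding step follows. Several details are illustrative assumptions, not how any of the partners above build prompts:

- the prompt wording;
- the `[n]` citation format;
- the character budget, which stands in for a token limit.

```python
def build_grounded_prompt(question: str, passages: list[str], max_chars: int = 2000) -> str:
    """Pack retrieved passages (already ranked best-first) into a prompt within a size budget."""
    context, used = [], 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage}"
        # Approximate budget: counts passage text only, not separators.
        if used + len(entry) > max_chars:
            break  # lower-ranked passages that do not fit are dropped
        context.append(entry)
        used += len(entry)
    return ("Answer using only the numbered sources below and cite them.\n\n"
            + "\n".join(context)
            + f"\n\nQuestion: {question}\nAnswer:")

print(build_grounded_prompt(
    "Who edits Wikidata?",
    ["Wikidata entries are edited daily by thousands of contributors."],
))
```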

Wikidata Speeds Data Processing From 30 Days to Under Three Days

In collaboration with DataStax, Wikimedia has implemented NeMo Retriever to vector-embed the content of Wikipedia, serving billions of users. Vector embedding, or “vectorizing,” is a process that transforms data into numerical vectors that AI can process and compare, so it can extract insights and drive intelligent decision-making.
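To make “vectorizing” concrete, here is a minimal Python sketch of embedding text and searching by cosine similarity. The `toy_embed` function is a hypothetical stand-in that hashes character trigrams into a fixed-size vector. It captures only surface overlap, whereas a model such as NeMo Retriever learns vectors that reflect meaning across languages. What carries over to a real system is the search logic: embed the corpus, embed the query, then rank by similarity.

```python
import hashlib
import math

def toy_embed(text: str, dims: int = 256) -> list[float]:
    """Hypothetical stand-in for an embedding model (NOT NeMo Retriever).

    Hashes lowercase character trigrams into `dims` buckets and normalizes to
    unit length, so it captures spelling overlap, not meaning.
    """
    vec = [0.0] * dims
    padded = f"  {text.lower()}  "
    for i in range(len(padded) - 2):
        digest = hashlib.md5(padded[i:i + 3].encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "little") % dims] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec

def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit length, so their dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))

def search(query: str, corpus: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Embed each passage, embed the query, and return the k most similar passages."""
    index = [(toy_embed(doc), doc) for doc in corpus]  # built once, ahead of time, in a real system
    q = toy_embed(query)
    return sorted(((cosine(q, vec), doc) for vec, doc in index), reverse=True)[:k]

corpus = [
    "Paris is the capital of France",
    "Tokyo is the largest city in Japan",
    "The Danube flows through Vienna",
]
for score, doc in search("capital of France", corpus):
    print(f"{score:.2f}  {doc}")
```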

Wikimedia used the NeMo Retriever embedding and reranking NIM microservices to vectorize over 10 million Wikidata entries into AI-ready formats in under three days, a process that used to take 30 days. That 10x speedup enables scalable, multilingual access to one of the world’s largest open-source knowledge graphs.
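Embedding and reranking typically work as two stages:

1. A fast vector search narrows a large corpus to a shortlist.
2. A slower, more accurate reranker reorders only that shortlist.

The Python sketch below shows that control flow. Two hypothetical scorers, `token_overlap` and `phrase_bonus`, stand in for an embedding model and a reranking model. This is a generic illustration, not Wikimedia’s or DataStax’s pipeline.

```python
from typing import Callable

Scorer = Callable[[str, str], float]

def two_stage_search(query: str, corpus: list[str], retrieve_score: Scorer,
                     rerank_score: Scorer, k_retrieve: int = 10, k_final: int = 3) -> list[str]:
    """Retrieve a shortlist with a cheap scorer, then rerank only the shortlist."""
    shortlist = sorted(corpus, key=lambda d: retrieve_score(query, d), reverse=True)[:k_retrieve]
    return sorted(shortlist, key=lambda d: rerank_score(query, d), reverse=True)[:k_final]

def token_overlap(query: str, doc: str) -> float:
    # Hypothetical first-stage scorer: Jaccard overlap of lowercase word sets.
    q, d = set(query.lower().split()), set(doc.lower().split())
    return len(q & d) / len(q | d) if q | d else 0.0

def phrase_bonus(query: str, doc: str) -> float:
    # Hypothetical reranker: word overlap plus a bonus for containing the exact phrase.
    # A real reranker is a learned model that scores each (query, passage) pair.
    return token_overlap(query, doc) + (1.0 if query.lower() in doc.lower() else 0.0)

docs = [
    "France has a capital",
    "the capital of France is Paris",
    "of France capital",
    "Berlin is a city",
]
print(two_stage_search("capital of France", docs, token_overlap, phrase_bonus,
                       k_retrieve=3, k_final=2))
# ['the capital of France is Paris', 'of France capital']
```

Because the reranker sees only `k_retrieve` candidates, its cost stays fixed as the corpus grows. In the example, it promotes the exact-phrase match that the first stage ranked second.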

This groundbreaking project ensures real-time updates for hundreds of thousands of entries that are edited daily by thousands of contributors, enhancing global accessibility for developers and users alike. With Astra DB’s serverless model and NVIDIA AI technologies, the DataStax offering delivers near-zero latency and exceptional scalability to support the dynamic demands of the Wikimedia community.

DataStax is using NVIDIA AI Blueprints and integrating the NVIDIA NeMo Customizer, Curator, Evaluator and Guardrails microservices into the LangFlow AI code builder. This lets the developer ecosystem optimize AI models and pipelines for their unique use cases and helps enterprises scale their AI applications.

Language-Inclusive AI Drives Global Business Impact

NeMo Retriever helps global enterprises overcome linguistic and contextual barriers and unlock the potential of their data. By deploying robust AI solutions, businesses can achieve accurate, scalable and high-impact results.

NVIDIA’s platform and consulting partners play a critical role in ensuring enterprises can efficiently adopt and integrate generative AI capabilities, such as the new multilingual NeMo Retriever microservices. These partners help align AI solutions to an organization’s unique needs and resources, making generative AI more accessible and effective. They include:

- Cloudera plans to expand the integration of NVIDIA AI in the Cloudera AI Inference Service. Cloudera AI Inference, which currently embeds NVIDIA NIM, will include NVIDIA NeMo Retriever to improve the speed and quality of insights for multilingual use cases.

- Cohesity introduced the industry’s first generative AI-powered conversational search assistant that uses backup data to deliver insightful responses. It uses the NVIDIA NeMo Retriever reranking microservice to improve retrieval accuracy and significantly enhance the speed and quality of insights for various applications.

- SAP is using the grounding capabilities of NeMo Retriever to add context to its Joule copilot Q&A feature and to information retrieved from custom documents.

- VAST Data is deploying NeMo Retriever microservices on the VAST Data InsightEngine with NVIDIA to make new data instantly available for analysis. This accelerates the identification of business insights by capturing and organizing real-time information for AI-powered decisions.

- WEKA is integrating its WEKA AI RAG Reference Platform (WARRP) architecture with NVIDIA NIM and NeMo Retriever into its low-latency data platform to deliver scalable, multimodal AI solutions, processing hundreds of thousands of tokens per second.

Breaking Language Barriers With Multilingual Information Retrieval

Multilingual information retrieval is vital for enterprise AI to meet real-world demands. NeMo Retriever supports efficient and accurate text retrieval across multiple languages and cross-lingual datasets. It’s designed for enterprise use cases such as search, question-answering, summarization and recommendation systems.

It also addresses a significant challenge in enterprise AI: handling large volumes of long documents. With long-context support, the new microservices can process lengthy contracts or detailed medical records while maintaining accuracy and consistency over extended interactions.

These capabilities help enterprises use their data more effectively, providing precise, reliable results for employees, customers and users while optimizing resources for scalability. Advanced multilingual retrieval tools like NeMo Retriever can make AI systems more adaptable, accessible and impactful in a globalized world.

Availability

Developers can access the multilingual NeMo Retriever microservices, and other NIM microservices for information retrieval, through the NVIDIA API catalog or with a no-cost, 90-day NVIDIA AI Enterprise developer license.
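As a starting point for the API catalog route, the Python sketch below builds (but does not send) an embedding request using only the standard library. Three details are assumptions based on the catalog’s OpenAI-compatible interface: the endpoint URL, the model ID `nvidia/llama-3.2-nv-embedqa-1b-v2`, and the `input_type` field. Verify each against the model’s page in the NVIDIA API catalog before use.

```python
import json
import urllib.request

# Assumptions to verify on the model's NVIDIA API catalog page:
# the OpenAI-compatible endpoint URL, the model ID and the "input_type" field.
EMBEDDINGS_URL = "https://integrate.api.nvidia.com/v1/embeddings"
MODEL_ID = "nvidia/llama-3.2-nv-embedqa-1b-v2"

def build_embedding_request(texts: list[str], input_type: str, api_key: str) -> urllib.request.Request:
    """Build, but do not send, a POST request that asks for embeddings of `texts`."""
    if input_type not in ("query", "passage"):
        raise ValueError("input_type must be 'query' or 'passage'")
    body = {"model": MODEL_ID, "input": texts, "input_type": input_type}
    return urllib.request.Request(
        EMBEDDINGS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

req = build_embedding_request(["¿Cuál es la capital de Francia?"], "query", api_key="YOUR_KEY")
print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` requires a real API key. Assuming the model distinguishes queries from documents, index documents with `input_type="passage"` and embed searches with `input_type="query"`.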

Learn more about the new NeMo Retriever microservices and how to use them to build efficient information retrieval systems.